“We choose to go to the moon in this decade, and do the other things, not because they are easy, but because they are hard” – JFK

It is no secret that we are big fans of using randomised controlled trials to work out whether a policy is working. RCTs represent the “gold standard” in evidence-based policymaking and, as we have argued previously, are a lot less difficult to run than you might think.

This is not to say that RCTs are easy, or that all RCTs are equally complicated. Some interventions are harder to evaluate than others, and these are often the more costly, complex ones. This is something that we’ve been thinking about recently whilst writing a report for the Education Endowment Foundation, published last week, on the evaluation of complex whole school interventions.

In the report, collaboratively written by UCL Institute of Education, Education Datalab, and the Behavioural Insights Team, we outline some of the methodological challenges associated with evaluating complex whole school interventions (CWSIs). These interventions may take years to fully bed in, especially when they aim to alter the functioning of the (school) system. They are often complex in the sense that it may be hard to predict in advance which outcomes we should evaluate the intervention on. For example, a whole school intervention may consist of several components that interact with one another or with the implementation context. As well as advice on how RCTs can be implemented under these difficult circumstances, the report includes a section on how to use quasi-experimental methods to evaluate complex interventions where an RCT is not possible.

What is a Trial Protocol?

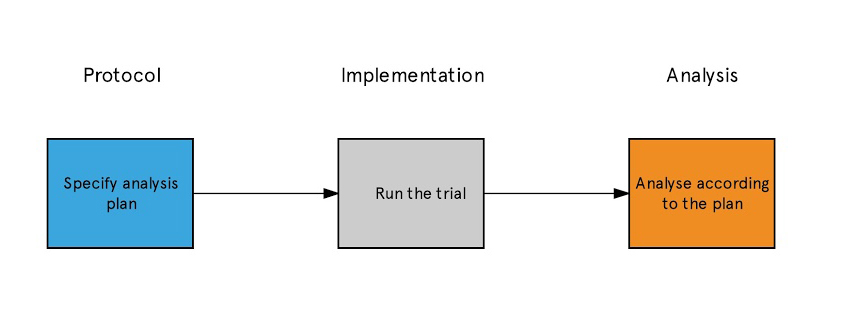

All well-designed randomised studies have a trial protocol – a document that outlines the design of the trial and our hypotheses, and details the analysis we will conduct to test whether an intervention has worked. The protocol is written before the trial is run, and so prevents us from engaging in questionable research practices, such as choosing our analysis strategy to give us the result that we want.

The problem with Trial Protocols

However, this process can sometimes seem too restrictive.

In a good large-scale trial, we’ll often conduct a process evaluation alongside the main trial – drawing insights both from qualitative research and from other data gathered during the trial to help us look inside the “black box” of an intervention and see how and why it seems to be working. Very often, these insights suggest alternative hypotheses about how the intervention works, and which groups are the most likely to benefit from it.

After the trial, we are free to analyse the data with these in mind – to understand whether an intervention has a larger effect on families with previous exposure to social care, for example.

But because we didn’t set out these hypotheses before we started analysis, we should treat this analysis as exploratory – and hence of less value than had it been specified in the protocol. Exploratory analyses should generally serve as a starting point for future research, not as conclusive findings in their own right. Whole school interventions seldom take less than a few years from start to finish, so using this analysis only to inform a next stage that may itself be years away is far from ideal. To test the new hypotheses properly, we would need to set them out in a new trial protocol and then run a whole new trial. This is likely to take a similar amount of time to the initial research.

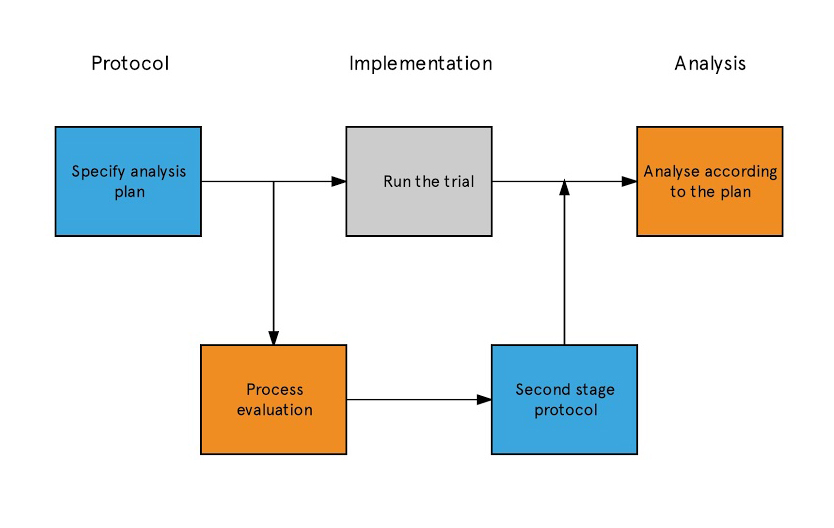

Two stage protocol

The two stage protocol is designed to overcome this issue. As usual, a trial protocol is written before the trial, and a process evaluation takes place alongside the trial itself. However, before the outcome data is made available to researchers, the insights from the process evaluation are drawn out during a holding phase. This ensures that the principle of only making hypotheses before analysis starts is strictly maintained. Any hypotheses that emerge are then written into the second stage of the protocol, which is finalised and published before the final data is analysed. They can therefore be included in the main results without concern that researchers have altered their analysis strategy to find evidence for their hypotheses.
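For readers who find it easier to think in terms of rules, the ordering constraint at the heart of the two stage protocol can be sketched in a few lines of Python. This is purely illustrative and not part of the report: the class name `TwoStageProtocol` and its methods are hypothetical, and the sketch assumes the simplest possible reading of the process, in which stage two must be published before outcome data is released, and nothing can be added afterwards.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TwoStageProtocol:
    """Hypothetical model of the ordering rules, not a real trial tool."""
    stage_one: List[str]                      # hypotheses set before the trial
    stage_two: List[str] = field(default_factory=list)
    stage_two_published: bool = False
    outcome_data_released: bool = False

    def add_stage_two_hypothesis(self, hypothesis: str) -> None:
        # Holding phase only: after publication or data release it is too late.
        if self.stage_two_published or self.outcome_data_released:
            raise RuntimeError("Stage two is closed; treat this as exploratory.")
        self.stage_two.append(hypothesis)

    def publish_stage_two(self) -> None:
        if self.outcome_data_released:
            raise RuntimeError("Cannot publish after outcome data is released.")
        self.stage_two_published = True

    def release_outcome_data(self) -> None:
        # Researchers only see outcome data once stage two is finalised.
        if not self.stage_two_published:
            raise RuntimeError("Publish stage two before releasing outcome data.")
        self.outcome_data_released = True

    def classify(self, hypothesis: str) -> str:
        # Anything not written into either stage remains exploratory.
        if hypothesis in self.stage_one or hypothesis in self.stage_two:
            return "confirmatory"
        return "exploratory"
```

In this sketch, a hypothesis suggested by the process evaluation and added during the holding phase is classified as confirmatory, while one thought of after the outcome data has been released cannot be added and stays exploratory.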

Complex whole school interventions are among the most challenging to evaluate, but ultimately they can reap the greatest rewards and catalyse the greatest shifts in a young person’s experience of education. However, they do not fit easily into the traditional model of trial protocols, hence the need for a bit of adaptation. We think that this two stage process offers a pragmatic way to analyse hypotheses which emerge during the course of the trial, without sacrificing the rigour of the evaluation. In the long term, improving our understanding of how these interventions work will be of benefit to education leaders, teachers and students across the country.