Why are eye movements important for behaviour?

It has been said that eyes are the windows to the soul. But for BIT, eyes are better thought of as the doorways to behaviour. This is because looking at things is one of the most obvious ways to understand attention, and attention itself is one of the most important aspects of understanding behaviour.

Eye movements can reveal a person’s emotions, intentions, and cognitive processes. For example, psychology research has shown that specific eye movements can be linked to accessing memories, even when people themselves say they can’t remember something! Eye movements are also related to decision making, as they can reveal what someone considers attractive (i.e. the ‘A’ in EAST). When someone’s gaze is manipulated, eye movements can even actively increase the value people place on choices. This makes eye-tracking data very useful for understanding why people behave the way they do.

However, collecting eye-tracking data requires expensive equipment, considerable expertise, and a fair amount of patience (see the photo of a traditional eye-tracking device below). These barriers mean that eye-tracking data is not used frequently in behavioural science. But what if there were a shortcut to some of the insights of eye-tracking, without the costs?

An alternative to physical eye-tracking

Simulated eye-tracking refers to the process of using software to predict where a person’s eyes are likely to be looking as they interact with images, without actually using an eye-tracking device. This can provide valuable insights into how people engage with things they can see, without the time, expense, and expertise needed to set up, collect, and analyse real eye-tracking data.

Since the 1990s, visual neuroscientists have been developing algorithms of this kind, trained and validated using real-world data, to predict where people will look in an image. Recent advances in machine learning have made these algorithms much more accurate. Researchers even enter their algorithms in competitions, where they often come close to the accuracy seen with real human observers. These algorithms take an image as input (e.g. a website, screenshot, or digital photograph), and output a predicted salience map which, when overlaid on the original image, shows which areas of the image are likely to attract the most attention. Here’s an example of a salience heatmap, where the “hot spots” correspond to where people are most likely to look:

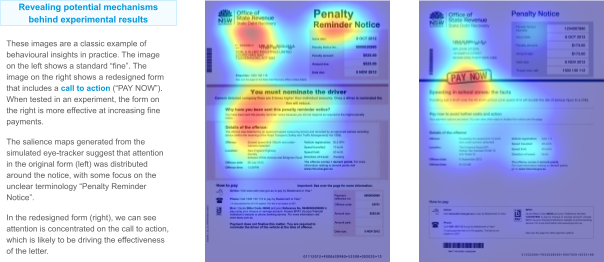

This example comes from a trial we ran back in 2014, where we wanted to draw attention to a call to action (the “PAY NOW” stamp) that was added to penalty notices and penalty reminder notices. Compared to the control notice, the trial notice resulted in a 3.1 percentage point increase in fine payments, which has likely saved millions of dollars in enforcement fees and printing costs. The salience map generated for the notice shows why this worked so well: attention in the control notice is spread across a number of different elements, while in the trial notice, attention is almost fully focused on the call to action.
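To make the "image in, salience map out" idea more concrete, here is a minimal sketch in plain Python. It is not the machine-learning approach described above, and it is not the tool we used for the notice. It is a toy centre-surround contrast heuristic, loosely in the spirit of early salience models, which scores each pixel by how much its immediate neighbourhood differs from its wider surroundings. The function names, the radii, and the 12x12 "notice" with a bright stamp are all made up for illustration.

```python
# Toy centre-surround salience sketch (illustrative only; not a trained model).

def box_mean(img, radius):
    """Mean brightness in a square window around each pixel, clipped at the edges."""
    height, width = len(img), len(img[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            rows = range(max(0, y - radius), min(height, y + radius + 1))
            cols = range(max(0, x - radius), min(width, x + radius + 1))
            values = [img[j][i] for j in rows for i in cols]
            out[y][x] = sum(values) / len(values)
    return out


def salience_map(img, centre_radius=1, surround_radius=4):
    """Score each pixel by how much its local patch differs from the wider surround."""
    centre = box_mean(img, centre_radius)
    surround = box_mean(img, surround_radius)
    raw = [[abs(c - s) for c, s in zip(c_row, s_row)]
           for c_row, s_row in zip(centre, surround)]
    peak = max(max(row) for row in raw)
    if peak == 0:
        return raw  # perfectly uniform image: nothing stands out
    return [[value / peak for value in row] for row in raw]  # scale to 0..1


# Hypothetical 12x12 greyscale "notice": dim background with a bright 2x2 stamp.
image = [[0.2] * 12 for _ in range(12)]
stamp = [(3, 7), (3, 8), (4, 7), (4, 8)]  # (row, column) positions, made up
for row, col in stamp:
    image[row][col] = 1.0

salience = salience_map(image)
hottest = max(((r, c) for r in range(12) for c in range(12)),
              key=lambda rc: salience[rc[0]][rc[1]])
print("Hottest pixel (row, column):", hottest)
```

In this toy image the highest score lands on the stamp, because it is the only element that contrasts with its surroundings. Modern salience models learn far richer cues (faces, text, layout) from real eye-tracking data, so treat this only as an intuition for what a salience map represents.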

Apart from helping to explain why certain interventions can work, simulated eye-tracking can help inform design decisions in a wide range of situations by:

- Making sure critical information is prioritised;

- Identifying distracting content;

- Comparing competing visual elements;

- Improving accessibility in online environments;

- Figuring out where best to deploy visual materials in the real world;

- Communicating how attention works.

It is best used alongside other research methods (e.g. user testing, A/B tests) to provide a more complete and accurate understanding of how people interact with visual material.

Simulated eye-tracking in practice

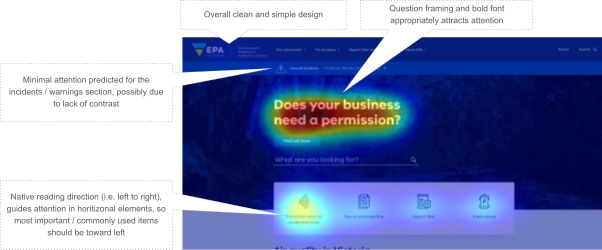

The BIT Australia team has used simulated eye-tracking to help with a number of projects. In one case, we used simulated eye-tracking to assess a previous version of the website of Victoria’s Environment Protection Authority (EPA). We were able to validate a number of design choices (e.g. the use of larger font, and human faces in images), and to identify areas that could be improved (e.g. prioritising information according to reading direction).

In another project, working with Transport for New South Wales, we used simulated eye-tracking to inform small design edits to behaviourally-informed visual resources. In this case, we generated salience maps for different versions of posters and lanyards (which were aimed at improving employee engagement with resources around injury management and workers’ compensation). This helped us understand whether attention was drawn to the core messages. When we tested these images with actual users, much of what they said agreed with the simulated eye-tracking results.
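One simple way to turn "was attention drawn to the core message?" into a number is to ask what share of the total predicted salience falls inside the message area, and then compare that share across design versions. The sketch below assumes you already have a salience map for each version as a grid of non-negative scores. The function name `attention_share`, the region coordinates, and the two small grids are hypothetical values invented for illustration, not results from the project.

```python
# Compare how much predicted attention lands on a region of interest.
# All numbers below are made up for illustration.

def attention_share(salience, region):
    """Fraction of total salience inside region = (top, left, bottom, right).

    bottom and right are exclusive, like Python slices.
    """
    top, left, bottom, right = region
    total = sum(sum(row) for row in salience)
    if total == 0:
        return 0.0  # blank map: no predicted attention anywhere
    inside = sum(sum(row[left:right]) for row in salience[top:bottom])
    return inside / total


# Hypothetical salience grids for two versions of the same poster.
version_a = [
    [2, 2, 2, 2],
    [2, 3, 3, 2],
    [2, 2, 2, 2],
]
version_b = [
    [1, 1, 1, 1],
    [1, 8, 8, 1],
    [1, 1, 1, 1],
]

core_message = (1, 1, 2, 3)  # middle row, middle two columns (assumed location)

share_a = attention_share(version_a, core_message)
share_b = attention_share(version_b, core_message)
print(f"Version A: {share_a:.0%} of predicted attention on the core message")
print(f"Version B: {share_b:.0%} of predicted attention on the core message")
```

A higher share suggests the design concentrates predicted attention on the message, but it remains a prediction, which is why checking the result against real user testing is still worthwhile.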

The limits of simulated eye-tracking

It’s important to mention that while these predictions are convenient, simulated eye-tracking will always be less accurate than real data. Individual differences in eye movement patterns, visual impairments, or external factors (such as lighting or environmental conditions) may mean that the predictions are not accurate. Simulated eye-tracking also can’t yet capture the dynamics of eye movements, like the order in which people look at things or how long they spend looking.

But having an approximate insight into how attention works, and being able to communicate this using easy-to-understand salience maps, means that simulated eye-tracking is an innovative and powerful addition to any behavioural research toolkit. Combined with other behavioural insights methodologies, simulated eye-tracking offers a modern approach to researching, understanding, and influencing human behaviour.

Want to find out more? Get in touch with bowen.fung@bi.team.