Here’s a reasonable question: why put multiple people on a task when you can just get one really smart person to do all the work?

Put simply, because the group’s probably going to do a better job.

The history behind the science

One hundred years ago, British statistician Francis Galton was on a mission. At a time when the principle of one-person-one-vote was spreading, Galton was a staunch believer that society was too important to be left to the unwashed masses. Having spent much of his life trying to prove that intelligence exists in but a few, he wanted to settle once and for all that most of us are, well, stupid.

And in a rather unlikely environment he saw an opportunity to do just that. At a county fair in Plymouth in Southern England, a motley crew of townsfolk were milling around trying to guess the weight of an ox. Eight hundred people put in a bet – from ‘experts’ like butchers and farmers, to lay people. Galton took all the estimates scrawled on pieces of paper and ran some basic analysis. His findings were remarkable: contrary to his hypotheses (and hopes), the experts didn’t do particularly well.

But it turns out the crowd was pretty smart.

Taken together, the average of the group’s estimates was 1,197 pounds. The actual weight of the ox? 1,198 pounds. The crowd was off by just one pound, or about 0.08%. Ever the empiricist, Galton reluctantly concluded that “the result seems more creditable to the trustworthiness of a democratic judgment than might have been expected” (quoted in Surowiecki 2004, p. xiii).

This became the first in a series of experiments which found that under the right conditions, you get more accurate results if you simply aggregate the views of a group of people rather than rely on any single individual – even someone with expertise in the area (Tetlock 2006).
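The averaging effect can be sketched with a small simulation. This is only an illustration, not Galton's data: it assumes each guess is the true weight plus independent, unbiased noise, and the noise level (`noise_sd`) and the function name are made up for the example.

```python
import random
import statistics


def crowd_vs_individual(true_value=1198.0, n_guessers=800, noise_sd=75.0, seed=0):
    """Compare the error of the crowd's average with a typical individual's error.

    Assumption: every guess is the true value plus independent Gaussian noise.
    noise_sd is a hypothetical figure, not taken from the historical record.
    """
    rng = random.Random(seed)
    guesses = [rng.gauss(true_value, noise_sd) for _ in range(n_guessers)]

    # Error of the aggregated (averaged) guess.
    crowd_error = abs(statistics.fmean(guesses) - true_value)

    # Median absolute error stands in for "a typical individual".
    individual_errors = [abs(g - true_value) for g in guesses]
    typical_individual_error = statistics.median(individual_errors)

    return crowd_error, typical_individual_error


crowd_error, individual_error = crowd_vs_individual()
print(f"crowd average is off by {crowd_error:.1f} lb")
print(f"typical individual is off by {individual_error:.1f} lb")
```

Under these assumptions, individual errors largely cancel out in the average. If the guesses shared a common bias, the average would inherit it, which is why the conditions discussed later (diversity and independence in particular) matter.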

Why?

Because people look at problems differently, so pooling multiple perspectives reduces the risk that decisions only reflect one interpretation of the world.

To illustrate, let’s take a common prediction problem: what will happen to inflation?

Ask an economist and they’ll probably give you an estimate based on what consumer markets seem to be doing and the latest unemployment figures, combined with what their training tells them about how these variables reflect both the real economy and expectations. This could end up being accurate, though the evidence isn’t particularly encouraging (in fact, a recent analysis showed that 98% of economists got it quite wrong (Zweig 2015)).

But what if you combined their response with the views of a small business owner whose primary market is overseas? They see fluctuations in trade patterns in their industry, feel the impact of oil price shocks, and they’ve possibly made an investment decision recently which required them to take a punt on how their business was going to grow in the future. Their estimate would reflect a different view on the world, and importantly, different information.

And what if you also added the guess of a financially stretched stay-at-home parent? They’re likely to give you an answer that reflects their lived experience balancing the household budget, what seems to have gotten expensive recently and how far they have to go to get a bargain.

A growing body of research indicates that the collective responses of these three people will do a better job at estimating inflation than just leaving it to the (expert) economist alone (Tetlock & Gardner 2014).

So you’re telling me expertise is irrelevant?

Not quite. Getting a good education is still worth it (even if it doesn’t make you better at predicting inflation!). But expertise can be overstated.

Studies have shown that crowds will beat experts when they are:

- Diverse – meaning they bring varying degrees of knowledge and insight;

- Independent – that is, individuals’ opinions aren’t affected by those around them;

- Decentralised – meaning they are able to specialise and draw on local knowledge; and

- Aggregated – which is to say there’s a mechanism for collecting views and converting them into collective intelligence.

When these conditions hold, there are very few experts who will outperform the group. In fact, researchers have even shown that US defence intelligence analysts with access to classified information can be beaten by some rudimentarily-educated amateurs: largely because they come to conclusions too quickly and struggle to update their opinions in the face of new and conflicting information (Tetlock & Gardner 2014).

OK fine, you’ve convinced me that crowds can be wise, but what does any of this mean for recruitment?

We live in the age of the knowledge economy where the competition for talent is fierce.

Organisations spend eye-watering sums trying to attract the best talent because in many industries, the difference between the best and the good has real implications for the bottom line (*Bock 2015).

But at the same time, studies have revealed that many hiring decisions are made on impulsive assessments taken at face value. Sometimes this is explicit, like when firms only hire from certain universities. Often it’s also implicit, like when we unconsciously discard CVs based solely on gender, sexuality, or ethnicity (Mishel 2016; Oreopoulos 2011; Booth & Leigh 2010; Wood et al 2009; Bertrand & Mullainathan 2004).

The upshot for firms is that their teams are not only less diverse, but also poorer performing. Diversity is increasingly recognised as a key ingredient to success (Phillips 2014). More diverse groups have been found to process information more deeply and accurately, be more innovative, have greater economic prosperity, price assets more accurately, and in the case of juries, consider perspectives more thoroughly and make fewer inaccurate statements (Galinsky et al 2015).

This seems like a mismatch: there are clear societal and individual gains to be had from diversity and yet hiring practices often deliver the opposite. Relying on the 19th-century method of hiring by CV, we at BIT were finding it hard to apply what we knew from the research to our own recruitment.

So, BIT, in partnership with Nesta, has spent the past year cooking up a tool – Applied – that aims to help organisations learn from the science and make smarter, fairer hiring decisions.

In doing so we wanted to know if the promise of a wise crowd might be part of the answer. So before trialling it on real people, we tested it.

When does a crowd get ‘wise’ for recruitment?: An experiment

Through a simple online experiment we asked 398 reviewers to rate the responses of four hypothetical (unnamed) candidates to a generic recruiting question (“Tell me about a time when you used your initiative to resolve a difficult situation?”).

Unsurprisingly, their combined ratings easily identified the best response. But most organisations can’t afford to ask 400 people to help them select a candidate. The real question is: at what point does the crowd get ‘wise’?

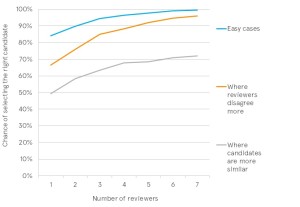

We took our data and ran statistical simulations to estimate the probability that different groups could correctly select the best candidate. We created 1,000 combinations of reviewers in teams of different sizes, ranging from one to seven people. We then pooled them by the size of the group and averaged their chance of selecting the right candidate.
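A simulation along these lines can be sketched as follows. This is a rough illustration rather than the analysis we actually ran: the reviewer pool here is synthetic, and the candidate quality scores, the rating noise (`rating_sd`) and the function names are all assumptions made for the example.

```python
import random
import statistics


def make_synthetic_pool(true_quality, n_reviewers=398, rating_sd=1.5, seed=0):
    """Build a stand-in reviewer pool: one list of ratings per reviewer.

    Assumption: each rating is the candidate's (hypothetical) true quality
    plus independent Gaussian noise. Real reviewer data would replace this.
    """
    rng = random.Random(seed)
    return [[rng.gauss(q, rating_sd) for q in true_quality]
            for _ in range(n_reviewers)]


def prob_correct_pick(pool, team_size, best_index, n_teams=1000, seed=1):
    """Share of randomly drawn teams whose averaged ratings rank the best candidate first."""
    rng = random.Random(seed)
    n_candidates = len(pool[0])
    hits = 0
    for _ in range(n_teams):
        # Draw a team of distinct reviewers from the pool.
        team = rng.sample(pool, team_size)
        # Average the team's ratings for each candidate.
        means = [statistics.fmean([ratings[c] for ratings in team])
                 for c in range(n_candidates)]
        top = max(range(n_candidates), key=means.__getitem__)
        hits += top == best_index
    return hits / n_teams


true_quality = [7.0, 6.0, 5.5, 4.0]  # hypothetical scores for four candidates
pool = make_synthetic_pool(true_quality)
for size in range(1, 8):
    print(size, prob_correct_pick(pool, size, best_index=0))
```

Raising `rating_sd` mimics reviewers who disagree more, and shrinking the gap between the top two entries in `true_quality` mimics candidates who are hard to tell apart.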

Figure 1 confirms that with more people, you are more likely to correctly identify the best person. Or, put another way, with more people you’re less likely to accidentally chuck out your best candidate.

The blue line in Figure 1 shows that under ‘easy’ conditions (that is, where there’s a reasonable gap in quality between the best and the second best responses), there’s a 16% chance that a person working on their own will select the wrong person. With a group of three, however, that falls to 6%, and by five, you can be pretty much certain they won’t make a mistake (1%).

But what about where people disagree more over what ‘good’ looks like? That’s what the orange line shows (we artificially increased the variance in scores). Where reviewers disagree more on a given candidate, you need to pool more opinions to gain the same level of accuracy. Moving from one to three reviewers has a big impact: the chance of getting it wrong falls from about one in three to 15%.

Finally, what about situations where candidates are really similar, and it’s hard to distinguish between them? The grey line reveals what we found when we tested the crowd’s ability to separate the second and third best candidates (whose responses were similarly graded). We found crowds are even more important here, and one person working on their own can be trusted no more than a coin toss!

Since we had a diverse sample, we were also able to test if different people do better. We found no significant differences between genders or by level of education. But older people tend to be a bit harsher in their ratings.

So where have we ended up? While we’re continuing to run experiments, for Applied, we have settled on three reviewers as the optimal crowd for recruitment.

Three balances the gains from getting an additional perspective with the cost of asking for another person’s time. In instances where we see lots of disagreement between reviewers, the tool will prompt for a fourth perspective. And if it’s difficult to distinguish between people, these results suggest you might want to be a little more generous with how many you take through to the next round, or get a few more eyes on the problem.
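To make the idea of prompting for a fourth perspective concrete, here is a minimal sketch of one possible disagreement check. It is not how Applied actually decides: the function name, the use of the sample standard deviation and the `max_spread` threshold are all hypothetical choices for illustration.

```python
import statistics


def needs_fourth_reviewer(scores, max_spread=1.0):
    """Flag a candidate whose reviewer scores disagree by more than a threshold.

    scores: the ratings given to one candidate by the initial reviewers.
    max_spread: hypothetical cut-off on the sample standard deviation.
    """
    if len(scores) < 2:
        raise ValueError("need at least two scores to measure disagreement")
    return statistics.stdev(scores) > max_spread


print(needs_fourth_reviewer([4, 4, 5]))  # reviewers broadly agree
print(needs_fourth_reviewer([1, 3, 5]))  # reviewers are far apart
```

In practice the threshold would need tuning against the rating scale in use and against how costly an extra reviewer's time is.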

For a peek under the hood of other ways we are improving how to recruit, check out the Applied website.

———————————————————————————————————————————–

*Bock, L., (2015), Work Rules: Insights from inside Google that will transform how you live and lead, Twelve, USA. Interestingly, our findings on when the recruitment crowd becomes wise are in line with similar analyses by Google on their own processes. Only one individual Googler is better than the crowd at identifying the best person, and for Google, four reviewers is the optimum.