We know that practical experience, or learning-by-doing, is a powerful method for learning. When we run training programmes, we want to make sure that every learner walks away with a deep understanding not only of what was on our slides, but more importantly, of the underlying principles and how to apply them to real-world problems.

Learning by doing with SkillsFuture Singapore

This motivation drove our collaboration with SkillsFuture Singapore (SSG) to run a Behavioural Insights (BI) hackathon. SSG drives and coordinates the implementation of the national SkillsFuture initiative, which promotes holistic lifelong learning by supporting an ecosystem of quality education and training in Singapore.

The hackathon was designed to upskill SSG staff in BI research and evaluation and, in turn, to encourage the application of BI in SSG's mission towards national skills development and mastery.

Hackathon projects at a glance

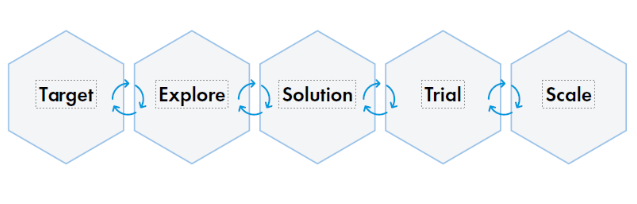

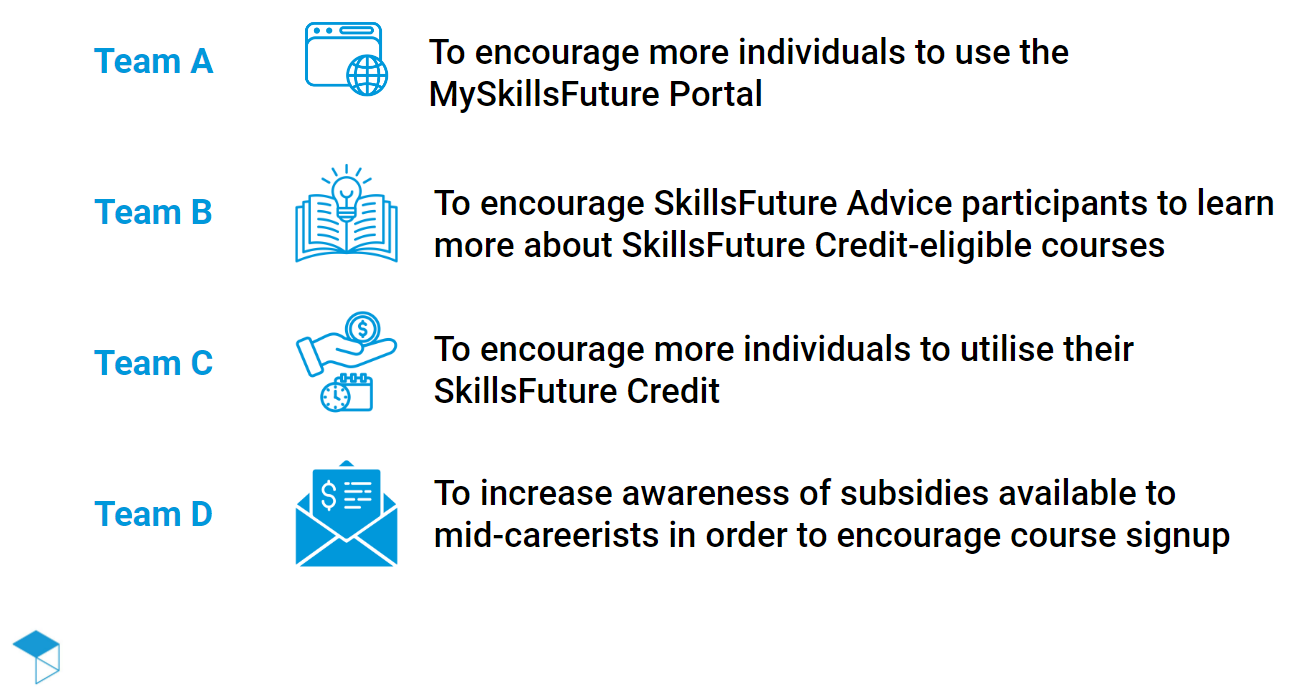

Over the course of seven months, 22 hackathon participants across four teams practised running their own BI project from end to end, with close guidance from BIT consultants, using BIT’s TESTS methodology to structure their projects.


What were some of the main lessons learnt?

- With proper targeting, different business challenges can benefit from the application of BI.

- Doing it yourself is the best teacher.

- The best solutions are often pragmatic and address an identified barrier.

- You can run a trial within six months.

- Careful thinking needs to go into deciding whether to scale up a successful intervention.

With proper targeting, different business challenges can benefit from the application of BI. Teams brought a diverse set of challenges to the hackathon; some were related to a lack of awareness, while others had to do with external resources. At the outset, it was not clear whether all the challenges would be amenable to a behavioural approach.

However, in the Target phase, BIT provided participants with the frameworks and structures necessary to scope their challenges more concretely. For example, how can you make your problem statement S.M.A.R.T. (Specific, Measurable, Assignable, Realistic, Timely)? We scaffolded teams as they explored metrics they could track and observe, such as click-through rates, usage and QR code scans.

With a keener focus on a behavioural outcome, the teams were better equipped to approach their business challenges from a BI perspective. As one team member put it, a valuable part of the process was “defining a project in terms of exactly what you are trying to solve and whether it is measurable.”

Doing it yourself is the best teacher. The teams brought challenges that they experienced as part of their job. Unlike a generic workshop which teaches frameworks decontextualised from the real world, the hackathon provided an opportunity for participants to directly apply BI on actual projects.

For instance, in the Explore phase, the teams conducted their own primary research and fieldwork, reviewed past studies, analysed historical data, and conducted brief literature reviews.

Participants learnt first-hand that secondary research alone does not provide a deep understanding of the audience. Teams found that direct fieldwork (i.e. interviewing members of their target audience) gave them new insights into their initial assumptions. One participant observed that “actual attitudes and actions of our target audience can sometimes differ from our initial hypothesis”, while another said that the Explore work provided “deep understanding on the customers to prove or disprove some of your hypotheses.”

The best solutions are often pragmatic and address an identified barrier. After learning BI, we are often in a hurry to jump straight into designing a Solution. However, participants were taught to first make sure their solutions are focused on a barrier or set of barriers; this ensures that they are relevant and contextualised to the organisation’s needs. An intervention cannot be effective if it does not address the heart of the problem.

As a result of a thorough Explore phase, teams were then able to design the best intervention(s) to address the gaps they found. For instance, through exploratory interviews, the team working on SkillsFuture Credit utilisation collected feedback from recent and potential letter recipients suggesting that the existing letter could be made simpler and clearer. This feedback directly helped the team to redesign the letter.

Teams also learned to be pragmatic when designing their solutions. As an organisation that started out in government, BIT understands the context within which public agencies make decisions; we are aware that for a solution to be impactful, it needs to be both effective and practical. Teams went through an impact-feasibility assessment, in which they evaluated the extent to which each solution would be both effective and operationally viable. As one participant reflected, “[I learned the] importance of balancing feasible and impactful solutions. That trade-off is sometimes necessary.”
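One way to picture an impact-feasibility assessment is as a simple scoring exercise. The sketch below is a minimal illustration under stated assumptions, not BIT's actual tool: the 1 to 5 rating scales, the cut-off of 3, the ranking by the product of the two ratings, and the example ideas are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: int       # hypothetical 1-5 rating: expected effect on the target behaviour
    feasibility: int  # hypothetical 1-5 rating: how operationally viable the idea is


def shortlist(ideas, threshold=3):
    """Keep ideas rated at or above `threshold` on BOTH dimensions,
    ordered by the product of the two ratings (highest first)."""
    keep = [i for i in ideas if i.impact >= threshold and i.feasibility >= threshold]
    return sorted(keep, key=lambda i: i.impact * i.feasibility, reverse=True)


# Hypothetical example ideas, for illustration only
ideas = [
    Idea("Simplify letter wording", impact=4, feasibility=5),
    Idea("Personalised phone calls", impact=5, feasibility=1),
    Idea("Add a QR code to the letter", impact=3, feasibility=4),
]

for idea in shortlist(ideas):
    print(idea.name, idea.impact * idea.feasibility)
```

Requiring a minimum score on both dimensions captures the trade-off the participant describes: a highly impactful idea that cannot be delivered (the phone calls here) drops out, however large its expected effect.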

You can run a trial within six months. Whenever we roll out a new policy or intervention, it is always important to demonstrate that it works. Running a Trial allows us to determine:

- The efficacy of the revised BI version compared with the business-as-usual version;

- Whether there are any backfire effects from implementing the BI version before it is officially rolled out; and

- Whether there might be unintended consequences resulting from the BI version.
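For a binary outcome such as whether an email was opened, comparing the BI version with business as usual often comes down to comparing two proportions. The sketch below uses a standard two-sided, two-proportion z-test with a pooled standard error; it is one common choice rather than the analysis the teams necessarily used, and the counts in the usage line are hypothetical.

```python
import math


def two_proportion_ztest(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference in proportions (pooled standard error).

    Arm a is business as usual, arm b is the BI version.
    Returns (difference b - a, z statistic, two-sided p-value).
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value


# Hypothetical counts: 1,000 of 10,000 opened the BAU email, 1,150 of 10,000 the BI email
diff, z, p = two_proportion_ztest(1_000, 10_000, 1_150, 10_000)
print(f"difference={diff:.3f}, z={z:.2f}, p={p:.4f}")
```

A significantly positive difference supports efficacy, while a significantly negative one would flag the kind of backfire effect listed above.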

Despite the tight timeline and fairly scoped target statements, three of the four teams were able to run randomised controlled trials (RCTs): an email trial, a letter trial and a social media advertisement trial.
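What makes an RCT work is that chance alone decides who receives which version. A minimal sketch of that allocation step for an email trial follows; the function name, the arm labels and the seed are hypothetical, and shuffling followed by round-robin allocation is just one simple way to keep arm sizes balanced.

```python
import random
from collections import Counter


def assign_arms(recipient_ids, arms, seed=2023):
    """Randomly assign each recipient to one arm, keeping arm sizes as equal as possible."""
    ids = list(recipient_ids)
    rng = random.Random(seed)  # fixed (arbitrary) seed so the allocation can be reproduced
    rng.shuffle(ids)
    # After shuffling, deal recipients out to the arms in turn (round-robin)
    return {rid: arms[i % len(arms)] for i, rid in enumerate(ids)}


# Hypothetical mailing list of 10,000 recipient IDs and three arms
allocation = assign_arms(range(1, 10_001), ["control", "treatment_1", "treatment_2"])
print(Counter(allocation.values()))
```

Fixing the seed means the same allocation can be regenerated later, which helps when checking that each recipient actually received the version they were assigned.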

When a straightforward RCT was not possible, we guided one of the teams to conduct a stepped-wedge cluster randomised trial, in which the intervention was rolled out across sites one at a time in a randomised order, so that sites yet to receive the intervention acted as controls for those that already had it.
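The rollout pattern of a stepped-wedge design can be written down as a site-by-period schedule. The sketch below assumes one baseline period in which no site has the intervention, followed by one site crossing over per period; the site names and seed are hypothetical, and real designs may switch several sites per step.

```python
import random


def stepped_wedge_schedule(sites, seed=7):
    """Return {site: [0 or 1 per period]} for a simple stepped-wedge design.

    Period 0 is a baseline with no site on the intervention. In each later
    period one more site (in a randomised order) switches to the intervention
    and stays on it. 0 = control, 1 = intervention.
    """
    order = list(sites)
    random.Random(seed).shuffle(order)  # randomise the order in which sites cross over
    n_periods = len(order) + 1
    schedule = {}
    for step, site in enumerate(order, start=1):
        schedule[site] = [1 if period >= step else 0 for period in range(n_periods)]
    return schedule


schedule = stepped_wedge_schedule(["Site A", "Site B", "Site C", "Site D"])
for site, row in sorted(schedule.items()):
    print(site, row)
```

Reading down any column shows, for that period, which sites are already treated and which are still serving as controls.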

Careful thinking needs to go into deciding whether to scale a successful intervention. The team working on increasing usage of the MySkillsFuture portal decided to Scale their successful email intervention to over 900,000 recipients on their mailing list. This decision was made after a careful analysis of what the trial results would translate into if rolled out in the real world. Our calculations indicated that scaling up the most effective treatment arm to the whole mailing list would lead to 9,900 more recipients opening the email and 15,200 unique click-throughs.
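The core of this kind of projection is multiplying the trial's lift by the size of the population it would reach. The sketch below assumes the lift observed in the trial carries over unchanged at scale; the function names and the 20% control open rate are hypothetical. As a consistency check, 9,900 additional opens across 900,000 recipients corresponds to a lift of about 1.1 percentage points (slightly less, since the list has over 900,000 recipients).

```python
def projected_extra(list_size, control_rate, treatment_rate):
    """Extra outcomes expected if the whole list received the treatment instead of
    business as usual, assuming the trial lift carries over unchanged at scale."""
    return round(list_size * (treatment_rate - control_rate))


def implied_lift(extra_outcomes, list_size):
    """Inverse calculation: the rate difference implied by a projected number of extra outcomes."""
    return extra_outcomes / list_size


# Hypothetical rates: 20.0% open rate under BAU vs 21.1% under the treatment arm
print(projected_extra(900_000, 0.20, 0.211))        # → 9900
print(f"{implied_lift(9_900, 900_000) * 100:.3f}")  # → 1.100 (percentage points)
```

Expressing the result as a count of additional people, rather than a percentage-point lift, makes it easier to weigh against the effort needed to scale.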

Comparing this real-world outcome to the relative effort required to scale, the team concluded that scaling would be a worthwhile next step.

However, this might not always be the case. We often caution partners not to assume that scaling up is an automatic decision after a successful intervention; as pragmatic behavioural scientists, we always advise partners to first compare the magnitude of success with the effort of implementation. Only if the former outweighs the latter, or if there are other positive externalities, should they decide to bring something to scale.

Conclusion

A behavioural insights hackathon is an efficient and effective way to give BI practitioners an opportunity to get their feet wet, and to learn how to apply a behavioural lens to developing and evaluating innovations in their work.