“AI won’t take your job, someone using AI will.” Or so say the AI influencers at least. At BIT we’re thinking hard about how we should be using artificial intelligence (AI).

We’re not worried that it’ll do us out of business, not yet at least. Rather, we’re excited about the potential to dramatically increase the scale, efficiency and effectiveness of applied behavioural science. We’re doing a lot already and we have more planned for this year.

So what are these AI methods and tools, how are we putting them into action and what do we have planned ahead?

1: More effective AI-enabled behavioural interventions

As anyone from BIT will tell you, an effective behavioural intervention makes something ‘Easy’, ‘Attractive’, ‘Social’ and ‘Timely’ (or EAST). Many successful interventions are based on simplifying processes and information, making it easier for people to take action.

There is huge potential in using AI to augment behavioural interventions, making them more effective. AI can perform cognitively demanding tasks in an instant, engage as authentically as a real person, and be available at the touch of a button when you need it.

An obvious use case is helping people find and interpret information. People spend a lot of time working out whether they’re eligible for a benefit, calculating how much tax they need to pay, or seeking health advice.

We recently ran an experiment to test whether and how people would use AI chatbots in public services. You can see one of those bots in action below.

Beyond the mundane but important task of navigating government advice, digital interventions can also tackle the thorniest challenges. For example, we found that an interactive WhatsApp chatbot intervention reduced exposure to intimate partner violence (IPV) among young women in South Africa.

What’s next? We expect to be developing and testing more of these digital, AI-enabled interventions over the coming year.

2: Qualitative research, at quantitative scale

It’s glib, but generally true, that quant data gives you breadth while qual gives you depth. Surveys and observational data provide limited information about a lot of people. Interviews give a lot of information from a small sample. But can AI let us have our qualitative cake and eat it? Can it bridge the gap between qual and quant, creating a new hybrid space of ‘quant with depth’ or ‘qual at scale’ depending on your perspective?

In an early demonstration of this, we analysed 3,000 free text answers from participants in a recent online experiment. The experiment tested safer-gambling interventions. The AI analysis (pages 45–47 of the report) allowed us to identify insights from the free text that would otherwise be lost. For example, the AI grouped together ‘suggestions for improvement’, helping us identify ideas such as a gambling spending block that requires contacting your bank to unblock.

A step further is AI actually carrying out qual research. This academic study ran almost 400 interviews with real people using an AI chatbot. Not only did the researchers gather interesting data at scale, but participants also liked the experience!

What’s next? We’re currently working with our sister organisation Nesta to further test AI qualitative research tools.

3: Simulating attitudes and behaviour with AI agents

So AI can supplement traditional research, but can it replace human participants altogether? Researchers have been able to replicate political survey data and experimental psychology results by using ‘simulated AI participants’. We’ve also done our own exploratory project testing how well AI-simulated surveys can represent cross-cultural values using the World Values Survey.

While still a nascent approach, there’s the potential to answer research questions that are otherwise extremely challenging methodologically. We’re particularly interested in research by Chris Bail and colleagues simulating disinformation and toxicity on social media platforms, and in Stuart Mills’s use of generative AI to navigate online user journeys and identify ‘dark patterns’ such as subscription traps.

What’s next? We’re delivering a project testing the accuracy of AI-simulated surveying.

4: Unlocking the value in unstructured text data

Lots of the most important public service data is text: reviews, complaints, case notes and so on. At BIT, we’ve run numerous projects demonstrating this value by using researchers to code data, for example coding homicide case files.

Thankfully, there aren’t that many murders each year so human coding is feasible. But what about bicycle or mobile phone thefts? Or hospital complaints? There’s no way that researchers can analyse these volumes of data.

As a proof of concept, we’ve used AI to analyse some publicly available coroners’ reports. Of course, lots of the most valuable text data is also very sensitive (e.g. crime reports), which presents challenges for using these tools.

What’s next? We’ll be exploring how we can use locally run AI models or more traditional NLP methods to work with sensitive text data.
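
To make ‘more traditional NLP methods’ concrete, here is a minimal sketch of keyword-based coding that runs entirely on a local machine, so no text leaves it. It is an illustration only, not the approach BIT uses: the theme names, keywords and the `code_text` and `theme_counts` helpers are all hypothetical, and a real codebook would be developed and validated by researchers.

```python
import re
from collections import Counter

# Hypothetical codebook: theme names and keywords are illustrative assumptions,
# not a validated coding frame.
CODEBOOK = {
    "waiting_time": ["wait", "delay", "queue"],
    "communication": ["told", "explain", "inform", "letter"],
    "staff_attitude": ["rude", "dismissive", "kind"],
}


def code_text(text, codebook=CODEBOOK):
    """Return the sorted list of themes whose keywords appear in the text."""
    tokens = re.findall(r"[a-z]+", text.lower())
    themes = set()
    for theme, keywords in codebook.items():
        # Prefix matching, so 'wait' also catches 'waited' and 'waiting'.
        if any(tok.startswith(kw) for tok in tokens for kw in keywords):
            themes.add(theme)
    return sorted(themes)


def theme_counts(texts, codebook=CODEBOOK):
    """Count how many texts were assigned each theme."""
    counts = Counter()
    for text in texts:
        counts.update(code_text(text, codebook))
    return counts


if __name__ == "__main__":
    # Made-up example sentences, not real complaints.
    examples = [
        "I waited ages",
        "Long queue, and staff were rude",
        "No complaints",
    ]
    print(theme_counts(examples))
```

Keyword matching like this is crude (it misses paraphrases and can produce false positives), which is part of why locally run language models are an attractive alternative for sensitive data.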

5: Tools to help behavioural scientists

EAST, COM-B, ISM etc. … behavioural scientists do love to apply a framework. But, if an AI model can read an X-ray as well as a radiologist, can AI run a COM-B analysis as well as a behavioural scientist?

We’ve built a proof-of-concept tool for applying EAST and COM-B (see below). Our experience so far is that the AI models are effective at listing all of the more obvious barriers and solutions. Sometimes, this is really helpful and saves time, but it’s probably not giving you the killer behavioural insight.

What’s next? We are integrating these AI tools to ensure we quickly hit the ‘standard’ barriers and solutions, enabling the team to jump straight to the more tailored and creative insights.

6: Analysing images at scale

Beyond text analysis, we’ve been very impressed with AI image analysis. This is another area that has traditionally relied on human researchers to review and analyse images.

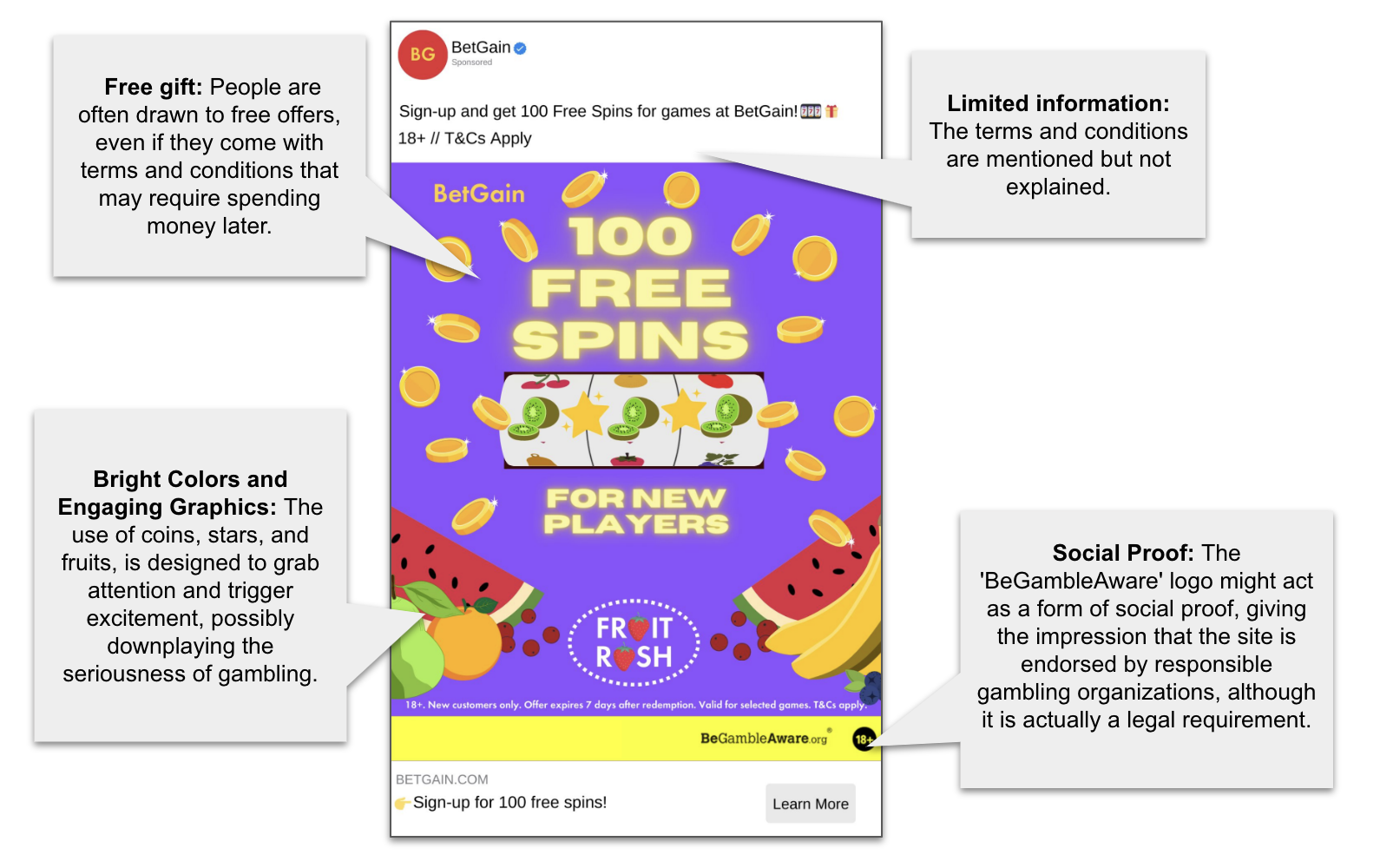

For example, we gave ChatGPT vision an image of a mockup gambling ad along with the prompt to identify the ‘dark patterns’ in the ad. Here’s what it came up with.

Taken to scale, methods like this would allow us to analyse thousands of ads or other images, which would otherwise be impossible. It could also be done on an ongoing basis as ads are launched, helping regulators to monitor advertising standards and trends.

What’s next? We’re excited to add this into our methodological toolkit this year.

7: AI tools to reduce the burden on frontline practitioners

Teachers, police officers and social workers could all do with tools to reduce their workload, so they can spend more time with the child or vulnerable person who needs their support. AI tools could do that. For example, it’s exciting to see Oak National Academy developing tools like AI lesson planners for teachers.

Our colleagues at Nesta have developed prototype AI tools to help early years practitioners, such as an activity generator (see the demo below).

Interventions for frontline staff that speed up routine, and frustrating, tasks can improve public sector productivity and also improve morale by freeing up staff time for more complex cases.

What’s next? We’re aiming to work with a frontline organisation this year to develop similar tools.

8: Integrating AI and online experiments

Over the coming year we will be further developing our online experiments platform, Predictiv.

The recent ChatGov experiment was our first time integrating an AI tool into the platform and evaluating how AI can ‘uplift’ human performance. We can now compare humans to ‘humans & AI’ in our experimental design.
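
As a rough illustration of what comparing the two arms could look like, the sketch below runs a two-proportion z-test on the share of participants who complete a task correctly in a ‘humans’ arm versus a ‘humans & AI’ arm. This is an assumption about the outcome measure and the analysis, not a description of how the ChatGov experiment was analysed, and the function name `two_proportion_z` and the numbers in the example are invented for illustration.

```python
import math


def two_proportion_z(success_a, n_a, success_b, n_b):
    """Two-sided z-test for the difference between two proportions.

    Arm A is the comparison arm (e.g. humans only) and arm B is the
    treatment arm (e.g. humans & AI). Returns (difference, z, p_value).
    """
    p_a = success_a / n_a
    p_b = success_b / n_b
    # Pooled proportion under the null hypothesis of no difference.
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("Standard error is zero; the test is undefined.")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value


if __name__ == "__main__":
    # Hypothetical counts, NOT results from any BIT experiment.
    diff, z, p = two_proportion_z(60, 100, 75, 100)
    print(f"uplift={diff:.2f}, z={z:.2f}, p={p:.3f}")
```

In practice an experiment like this would more likely be analysed with a regression that adjusts for covariates, but the simple test captures the core comparison between the two arms.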

We also want to get more free text data from participants in online experiments and extract value from it. This research from Google showed that a simple chat interface in a survey elicited twice as many words in responses as a simple open text box.

What’s next? This year we’ll be piloting the use of simple chat interactions to get more information from people and expanding our use of AI in analysis workflows to analyse the text.

Looking ahead

Over the coming year, we’ll be sharing updates on many of these strands, and probably others that we can’t even envisage right now.

We expect the way we do applied behavioural science to evolve, enabling us to have greater impact at scale.

We’d love to hear from anyone excited about any of the work and thinking we’ve set out above, and who would like to collaborate on a project to test some of these ideas to solve real world problems.

If you’re interested in talking further to us about this work, do reach out directly to Edward.Flahavan@bi.team